Where Physical and Digital Worlds Collide

In this blog for Data-Centric Engineering, Paul Clarke (Chief Technology Officer at Ocado) documents Ocado’s journey with building synthetic models of its business, its platforms and its underlying technologies, including the use of simulations, emulations, visualisations and digital twins. He explores the potential benefits of digital twins, including the opportunities for creating digital twins at a national scale.

(Long-read; 6,060 words. All images produced by Ocado)

Impact Statement:

Synthetic environments in the form of simulations, emulations, visualisations and digital twins, offer numerous benefits at a company, country and even planetary scale. They can enable faster, safer and cheaper innovation; they can enable machine learning models to explore designs and optimisations that are beyond our human minds; they can enable us to make better use of scarce resources such as time, energy, land, transportation network bandwidth and many others; they can help us explore solutions to major societal challenges.

The concept of creating digital twins at a national or even planetary scale is truly ambitious. The only way this could be achieved is by establishing common frameworks, standards and interfaces to enable the component digital twins to be crowdsourced, and then connected together via data marts and other smart glue. Given the scale of the challenges we now face, such as climate change, poverty and pollution, this is a moonshot we should give serious consideration to.

1. Our Simulation Journey Begins

In February 2006 I stepped into Ocado’s Customer Fulfilment Centre (CFC) in Hatfield for the first time. I was completely blown away by the Aladdin’s Cave of technology that greeted me there. Thousands of plastic crates travelling around many kilometres of conveyors with all manner of cranes, shuttles and other machines whizzing around under software control. Ocado had been founded in April 2000, and this was their first automated warehouse which had been shipping customer grocery orders since September 2002.

That warehouse tour turned out to be the first step in a rather unusual job interview. Later that day I had a chat with the individual who led the software teams who were building the CFC control systems. I had started my career writing discrete event simulations for defence systems and so naturally my first question was: “You did simulate this CFC before you built it didn’t you”; the answer was no, they hadn’t.

Three months later I joined to do a one year consultancy project with the expectation that I would then go off and do another startup. However that was the start of a love affair and like many love affairs, they get the better of you and your best laid plans go out of the window. And so it was with me and so after an extraordinary 14 year rollercoaster ride, I am still there.

Even though the Hatfield CFC (we refer to it as CFC1) was at that stage just a shadow of its current self, it was still a massively complex and impressive facility. The founding team had managed to design it and get it live armed only with a few spreadsheet based models, which was no mean feat. However it was clear that it would be going through significant further evolution and growth, and that Ocado had plans to build many such CFCs around the UK.

So I was convinced that we needed a simulation to test these future designs, to test new control algorithms, to optimise the processes and flows and to model future CFC designs before we built them. And so it was that alongside my day job, I started to hatch a plan to build an end-to-end simulation of CFC1.

The first step was to review all the leading third-party simulation frameworks we might use to construct such a model. Almost all of these frameworks had come from the automotive industry where they were used to model a few hundred assets on the move at any one time. We however had thousands of crates traversing a maze of conveyors with the topological complexity of the road network for a small city. Then we had all the other actors that would need to be modelled such as people, cranes, forklift trucks, goods to man machines and many others. So my conclusion was that we had no option but to build our own simulation framework from the ground up. This was inline with Ocado’s strategy which was to build almost all of its technology in house.

The first person that I hired into Ocado, Rob Stadie, who had been writing simulations and control systems for defence aerospace systems. I set him the challenge of creating this simulation framework and then using it to build a simulation of the outbound side of CFC1. And so it was that our simulation team was born.

2. CFC1

To understand the task of simulating CFC1, you need to understand a little bit about its layout and operation.

A typical Ocado customer order is for 50 items picked from a range of 55,000 different products. The warehouse is split into four temperature zones – ambient, produce, chilled and frozen. The customer orders are downloaded to the warehouse and the warehouse management system splits each order into a list of items to be picked and a set of plastic crates – we call them totes – into which they will be packed.

Each tote contains three carrier bags. The items in the order are allocated to a specific carrier bag in a specific tote in a specific temperature zone. The allocation of items to the different bags, the sequence in which those items will be picked and packed and their 3D orientation, are optimised against a set of soft and hard constraints. For example, making sure that the heavy tins are packed into a bag first and that the eggs and soft fruit are packed in last.

The picking of the higher velocity products (i.e. the ones we sell more of) takes place in pick aisles and CFC1 contains 28 of them. Then there are other “goods to man” machines for picking the slower moving items. Each pick aisle is about the length of a football pitch, typically has 6-9 people working in it and has the throughput of a large conventional supermarket.

On one side of the pick aisle are a series of pick stations, typically 12, spaced evenly along its length. On either side of each pick station, and on the other side of the aisle opposite the pick stations, products are arrayed in containers of different sizes (crates, trays or pallets) in pick locations. The pick locations are replenished by cranes that run in their own aisles on either side of the pick aisles. The cranes transfer the containers of product from storage locations to their pick locations and collect the empty containers for reuse.

Fig. 1. A pick aisle inside the Hatfield CFC

The totes then travel around the network of 32 km of conveyors, visiting those pick aisles which contain one or more of the items that it needs to collect. When a tote reaches a junction in the conveyor network, a barcode reader scans a barcode on the tote to identify it and then it is routed to the required output branch of the junction.

Having entered an aisle, the totes travel to the appropriate pick stations where the combination of a computer screen and lights direct personal shoppers to pick the required products from the pick locations into the correct carrier bag in the correct sequence; each item is verified before it is packed by scanning its barcode.

In this way, the totes fly around the network of conveyors, picking up their items at pick stations, like a carefully choreographed and optimised scavenger hunt! Once they have collected all their items, the totes are then sent to the dispatch area where they are loaded into roll cages and then into delivery vans. The carrier bags of groceries are then delivered to customers’ kitchen tables in one hour delivery slots.

This is a massively simplified description of a very complex and highly optimised end-to-end system where fractions of a second count.

3. Our Simulation Environment

The first step was to create a simple discrete event simulation framework that could simulate a single pick aisle. Based on his previous defence work, Rob’s language of choice for building the simulation framework would have been C++ but Ocado was already established as a Java house and so Java it was.

The pick aisle was broken down into its elemental components – conveyor straights and corners, conveyor junctions and merges, pick stations, pick locations, barcode readers and so on. Following a normal object oriented design approach, a digital simulation component was developed for each physical component and the digital components were assembled into a simulation model of the complete aisle.

The next step was to calibrate the model. This required Rob to spend many hours in CFC1 gathering dimensions, timings and other vital statistics from the physical pick aisle we had selected as the proof of concept. These data were then stored in a database and loaded by the model during initialisation.

As well as the digital representations of the physical components, a number of statistical models had to be developed to represent processes such as the time taken to pick a given product or the probability of exception conditions such as barcode scanner at a junction not being able to scan the barcode on a tote, resulting in it being routed to the junction’s default branch.

The simulation model of the pick aisle was then validated against the real pick aisle using the data logs from its control systems. Initially the model was just of the flows of totes around the aisle but then the picking and other processes were integrated.

At this point I think it would be fair to say that Ocado’s exec board thought the whole project was slightly far fetched and unlikely to yield any useful results; so as a project it was probably being humoured rather than sponsored…

When the shiny new simulation was validated, we made some predictions on future performance characteristics which turned out to be horribly wrong for reasons that I honestly cannot remember! That was nearly a fatal blow to “simulation @ Ocado” but Rob found the cause of the problem and the rejigged simulation started to yield meaningful results.

With confidence in the simulation growing, the next step was to start scaling it to model more pick aisles and the conveyors that interconnected them. This was going to require hiring a small team of simulation engineers to work with Rob and that in turn meant we had to pitch the idea of simulating the whole outbound side of the CFC to the exec board. I remember that meeting well and although we did get backing to proceed, I don’t think anyone thought we would actually manage to pull this off.

Over the first 15 years of its life before entering its current maintenance phase, CFC1 has been a living lab. During this period it was constantly evolving whilst at the same time shipping millions of customer orders. Whenever our engineers invented a new better mouse trap, we would unceremoniously rip out the old one and install the new one. We did this because we knew we would be building many more warehouses, even though at the time our sights were just on the UK online grocery market rather than the international platform market that would subsequently emerge. If you know where to look you can spot the scar tissue of these repeated trips to the operating theatre.

So one of the challenges for the simulation team was that the simulation they had to build was inevitably going to be a moving target. This was one of the main considerations that led the team to opt for building a framework that could generate a simulation from a database of CFC specifications rather than hand crafting the simulation. This meant that as the CFC evolved, the team could just update the specifications and regenerate the simulation on demand; that turned out to be an absolutely critical architectural decision in the life of the simulation project.

One could summarise the end-to-end fulfilment process in CFC1 as: Multiplexing customer orders into empty totes that then flew around conveyors on their scavenger hunt, before then being demultiplexed back into completed customer orders that were loaded onto vans for delivery.

This was a massively complex end-to-end process with millions of separate moving parts. The whole system was very highly sensed and we had been living the Internet of Things (IoT) dream long before it was a thing. It was also under precise software control, with hundreds of algorithms and millions of lines of software, all developed in-house. But despite all this investment in making the end-to-end process deterministic, there were within it multiple sources of randomness. For example, variation in the friction on conveyors causing the crates to slip slightly, variation in the performance of different personal shoppers and the timing of their toilet breaks, mechanical faults resulting in delays or diversions and so on.

Creating a high fidelity simulation required developing accurate statistical models for all these sources of randomness. This randomness also meant that if you ran the simulation multiple times with the same set of initial starting conditions and the same set of customer orders, you would inevitably get slightly different results. Assuming the simulation was accurate and calibrated, these varying results generated an envelope or distribution of possible outcomes that the actual observed results would lie within. This distribution was elaborated by typically running the simulation hundreds of times with the same data.

To speed up these Monte Carlo studies, the simulation was run multiple times faster than real time and in parallel on multiple servers. A future evolution of the simulation framework would enable these simulation jobs to be run across a farm of on-premise simulation servers and then use public cloud instances to flex capacity as required; this enhancement also automated the analysis of the simulation results

The simulation allowed us to test new control algorithms, new potential conveyor topologies and new mousetraps before we built them. But we also wanted a way to test production software before deploying it in the real warehouse and this meant evolving our simulation into a higher fidelity emulation of the actual hardware. To support this, the simulation framework was adapted to support the same interfaces as the real hardware, which enabled the production software to be run on it without modification. When in emulation mode, the simulation framework had to be run in real time in order to generate a realistic timing environment for the production software.

The biggest challenge was that the control systems had never been written with emulation in mind. This meant that emulating their complete operating environment was a very complex and compute intensive task. Once the benefits of emulation had been proven, future versions of the control systems would be architected to be “emulation first”. This dramatically improved the performance of the emulations, for example allowing many instances to be run on a normal desktop whilst an engineer was at lunch.

Ocado already had a rich history of stealth projects. Someone would have an idea that they were excited about and then go off and develop it, often in their own time or under the radar on the back of another business sponsored project. Well one such stealth project was developing a visualisation of the warehouse. This was a wire frame 3D representation of all the important features and actors within the warehouse, such as conveyors, pick stations, pick aisles, totes, personal shoppers etc, but with the walls and floors taken out.

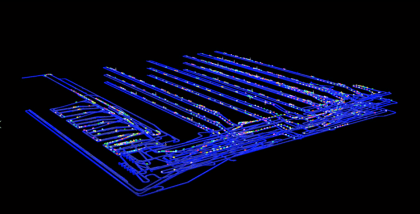

Fig. 2. Visualisation of Hatfield CFC digital twin

This visualisation could be used to playback the simulation and emulation results but it could also be used to play back data from the actual warehouse. This enabled us to ask questions such as what was happening at a specific conveyor junction at 02:30 on a given day and then to play it forwards or backwards from that moment as required. It also enabled you to see the wood for the trees, for example by asking the visualisation to remove all totes that did not meet certain criteria, to colour code the remaining ones based on their exception conditions and so on.

Although this visualisation started life as a stealth project it soon became an essential diagnostic tool within the control room of the live warehouse. The configuration of the visualisation was done using Iron Python scripts. One day I found out that our CEO, Tim Steiner, had asked for a copy of the visualisation to be setup in his office and that he had subsequently taught himself to run the configuration scripts; it was at that point that I knew that the visualisation project had properly emerged from stealth mode!

In late 2006, I had been seconded to the team that were designing Ocado’s second warehouse (CFC2). However a slowdown in sales growth on the back of the 2008 recession meant that additional warehouse capacity was not required and so the design for CFC2 got mothballed. Then in January 2010, the need for additional capacity was back on the agenda again and so the design for CFC2 was dusted off and updated. By this time we were in a position to build a simulation of this design using our shiny new simulation framework. The simulation was built and the design for CFC2 was validated using operational data from CFC1.

CFC2, which was constructed in Dordon in the Midlands, went live in February 2013 and the only problem was that the ramp-up schedule turned out to be overly conservative and had to be revised. The very smooth and fast ramp-up of CFC2 was a testament to all the effort that had gone into simulation and software testing.

4. Birth of the Digital Twin

The term “Digital Twin” is now very much in the Zeitgeist. However unfortunately the term has been completely hijacked and is being used to describe everything from a static database that describes a physical asset to a high fidelity simulation that is conjoined with its physical twin; it is this latter definition that is what I mean by a digital twin.

For example, imagine you wanted to optimise traffic flow around a city such as London. The road network and the traffic on it is your physical twin. You would probably put cameras and sensors at junctions to record the movement of traffic. You would then feed these data into a digital model or simulation of the traffic network. You could then use this model to optimise the topology of the road network, timing of traffic lights and so on. You can then feed those outcomes back into the physical twin, for example using your simulation results to update the settings of the actual traffic lights.

This was the next stage in the evolution of our simulation/ emulation framework. We would feed the data from the real warehouses, CFC1 and CFC2, into their simulations. We would then use the simulations to run what-if studies to optimise the control parameters of the physical warehouses. We would then upload the optimised control parameters into the control systems of the physical warehouses, thus creating conjoined digital and physical twins.

In July 2013 we signed a deal with Morrisons to put their grocery business online using our end-to-end platform and the new service had to go live in January 2014. It was clear that implementing this new service would require massive technological development and business development programmes. This was to include giving Morrisons roughly 50% of the storage and operating capacity of CFC2, and all these changes had to be simulated.

Our founding vision had been to use a huge amount of technology and automation to do online grocery scalably, sustainably and profitably and we had achieved that. It was also part of that founding vision that once we had evolved and proven a solution for ourselves, that we would then make this solution available to other retailers. Morrisons was the first such retailer but our aspirations were worldwide.

The Morrisons online grocery service was implemented using the software and hardware platform that we had built and operated for ourselves over the proceeding 12 years. However this platform had never been designed to scale to support multiple retailers across multiple continents. And so it was that having put Morrisons online grocery service live on 10 January 2014, I gathered everyone from Ocado Technology into a hotel conference room and told them that we were going to rewrite the whole platform from scratch. This was the start of a massively ambitious and complex project to develop our Ocado Smart Platform.

Ocado’s first two warehouses were, and still are, amongst the largest, most complex and most sophisticated automated warehouses for online grocery in the world. The nature of their design and the underlying technology used to construct them, meant that you had to build most of the warehouse to deliver even one customer order. This was fine in the UK, one of the most mature and evolved markets for online grocery in the world, but would not be suitable in other markets that were less evolved.

Then there was the fact that the time to pick a customer order was anything up to 2.5 hours. This was fine for next day or even same day orders but the writing was already on the wall for immediacy – customers were going to want to place orders for delivery within the hour. The combination of these factors and many others, meant that we needed a new technology to construct the automated warehouses for our future Ocado Smart Platform customers. The concept we opted for was to use swarms of identical robots to assemble our customers orders.

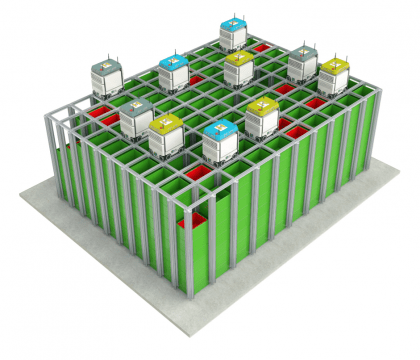

Imagine a giant chess board and on that chess board there are robots that like rooks can move along rows and columns. Under every chess square is a stack of storage bins containing groceries, up to 21 bins deep. The robots can stop on a square, lower a grab, pick up the bin from the top of the stack, bring it up into the body of the robot, then move to another square and deposit the bin. Or they can bring the bins to pick stations, where a human or indeed another type of robot, can pick groceries from a bin into a customer order or to decant stations where products arriving from suppliers on pallets are deposited into bins and then into the grid.

We call these hives and our second generation warehouses typically contain two of them – one for ambient products and one for chilled; frozen products are handled via a different process. These hives can be multiple times the size of a football pitch with thousands of robots swarming around them. The robots collaborate with one another, enabling us to pick a typical 50 item order in around five minutes which is a key ingredient in the recipe for immediacy. For example if a robot wants to get a bin that’s fourth down in a stack then it just gets three of its friends to move the top three bins out of the way, so that it can grab the one it wants.

Fig. 3. Schematic of swarm robotics hive

Before developing this swarm robotics technology, the first step (after filing some patents) was to build a simulation of a warehouse using this technology. This simulation was used to prove the underlying concepts but also to come up with a physics model for the robot that would have to be developed. This technology went live in our Andover CFC in August 2016 and in our warehouse in Erith in SE London in June 2018.

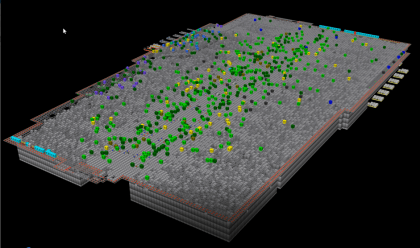

Fig. 4. Visualisation of Erith CFC digital twin

As with our first two warehouses, the simulation of these robot swarms has evolved into a digital twin. The robots generate 5000 data points 1000 times a second. That’s about 1Gb of data per robot per day or about 4Tb of data per day for swarms of 3,500 robots in just one CFC. There is no way that human engineers staring at screens could oversee, let alone optimise, a system of that complexity in near real time – it is completely beyond human scale.

So we stream all those data to the cloud where an machine learning (ML) based healthcare system uses predictive maintenance to keep every member of the swarm in tip top shape, spotting the onset of problems before they become problems. This is directly analogous to what can hopefully be done in terms of remote medicine for humans using patient records, data from wearables and other sensors in the home. However what’s special about a swarm of identical robots is that when one comes into the pits for an inspection or service, another one just takes its place.

These data are also fed into our digital twin of the robotic hive. This digital twin optimises the behaviours of the swarm and then updates the operating parameters of the real-time control system that manages the hive. This is an example of one of the most exciting applications of digital twins, namely the ability to connect machine learning models to them in order to discover designs and optimisations that are beyond what humans could conceive of.

Other applications for the simulation framework started to emerge, which lead us to open source it, initially within Ocado Technology but subsequently on GitHub for public use. For example building a simulation of our demand forecasting system that uses an ensemble of many different forecasting engines, over 60% of which are now ML based, to predict the demand for the 55,000 different products we sell. These engines are looking for the sweet spot that maximises the availability and freshness of products for our customers whilst minimising the waste and required stock cover.

We also simulate the thousands of routes that our delivery vans drive each day in order to try out new routing algorithms. These simulations are stops on a much bigger journey to build an end-to-end simulation of our entire business and our platforms. So maybe one day we will release SIM Ocado as an online game!

Based on the deals already signed for our Ocado Smart Platform, we will be building over 30 CFCs using this swarm robotics technology around the world over the next three years. The designs of these warehouses need to be individually simulated before construction and this has led to the development of design tools that enable non-simulation engineers to layout the designs and then test them. As another stealth project, we built augmented reality (AR) into the visualisations of these designs, enabling you to fly through these simulated environments using AR headsets.

5. From Groceries to Atoms

One way to view Ocado’s history is that we have been on a 18 year shopping trip for innovation assets – data, intellectual property, know how, technologies and competencies – using online grocery as the vehicle for that trip.

Of these, the competencies are particularly valuable because although they take time to acquire, they are a source of competitive advantage and once acquired, can be used in lots of different places. For example, once you have acquired the competency of using natural language processing to enable your customers to place and manage orders using voice, you can also use that same competency to enable an engineer to talk to a robot, to create a chatbot that can talk to your customers and so on. Some of our key core competencies include simulation/ digital twins, Artificial Intelligence (AI)/ ML, optimisation, motion control, IoT, cloud computing, robotics and disruptive innovation itself. Of these, simulation/ digital twins is one of our deepest and most differentiating competencies.

The reality is that the innovation assets we have collected know very little about groceries because they don’t need to. Groceries are just atoms with certain properties. They are actually quite troublesome atoms because they have to be kept at the right temperature, they are irregularly shaped, they can crush one another if you pack them in the wrong order, they can have short shelf lives and so on. This means if you can manage food atoms you can manage other types of atoms. This in turn means we can take our innovation assets and use them to disrupt all sorts of other sectors, some of which are very far from online grocery.

Some of these spinout applications of our innovation assets that we are working on include: vertical farming, massively dense automated car parks, baggage and freight handling for airports, parcel sortation, rail freight and container ports. The recipe for forming these spinout ventures is to form partnerships that combine our areas of expertise (e.g. AI/ ML, digital twins, robotics, dexterous manipulation etc) with expertise and domain knowledge for the target application that the partner brings.

When exploring these new ventures, the first thing we do is file some patents and the second thing is to build a simulation of the target application. We use simulation as a tool to help power and accelerate our journey of innovation, exploration, self-disruption and reinvention.

6. Thinking Bigger

Ocado is a great example of how some of the most exciting applications of AI/ ML and robotics lie where these digital and physical worlds collide. Digital twins are the third member of this disruptive family of technologies and IoT is another one.

Digital twins enable you to explore the physical world faster, cheaper and with less risk. As mentioned earlier, digital twins enable ML models to explore and optimise digital worlds to find solutions that are beyond human scale.

Digital twins enable you to stress test your systems by subjecting them to potential perturbations and exceptions. For example, a digital twin of a city that included models of the emergency services and hospitals might allow you to explore the impact of a plane crash or a terrorist attack.

After an accident on a motorway, it’s common for waves of congestion to still persist even when the accident has been dealt with. This is why rather than just reopening the motorway and allowing cars to simply drive off at full speed, the police will often drive in front of the cars and slowly accelerate in order to prevent standing waves of congestion from being established. Similarly, digital twins can help us find ways to return a perturbed system back to its steady state

Digital twins can help us answer “what if” questions about the future such as the potential impact of climate change, disruptive changes to systems such as the impact of local energy generation and electric vehicles on the national grid, the impact of new policies or regulatory frameworks and so on.

You can explore a lot using simulations but eventually you need to start experimenting in the real world. This is where living labs come in. They provide a physical environment that is representative of the real world but initially more constrained. You can then loosen those constraints over time as your capabilities and confidence grows. Living labs are not technology demonstrators, they are about “learning by doing”, using experiments to deliver real services to real customers – both commercial and consumer.

Why? Because customers keep you honest, they give you feedback, they help drive innovation, pace and agility. Digital twins continue to provide value as part of a living lab, allowing you to evaluate new ideas before you try them in the lab.

Along with our consortium partners, we are working to establish a living lab in Hertfordshire to explore the intersection of autonomous vehicles, drones, robots, smart infrastructure and smart services. The first thing we will do is of course to build a simulation of the living lab which will evolve into a digital twin.

Ocado operates at this intersection of AI/ ML, robotics, digital twins and living labs – building smart mobile machines, plugged into the world around them with IoT, streaming their data exhaust to the cloud and using digital twins and living labs to help design and optimise these complex ecosystems.

The UK is competing on a world stage with some countries that have much greater resources than we do. So we will need to find ways to play the innovation game much smarter, with greater leverage and more selectively – we need to find the asymmetric warfare model of innovation. Digital twins are just one example of ways in which the UK could work smarter in order to increase its competitive advantage.

7. National Digital Twin

Thinking about digital twins at a national scale leads to the vision of creating a National Digital Twin (NDT) of the UK. This is a truly ambitious vision that some might dismiss as unachievable, but then most people would have dismissed something like Google Street View as unachievable until it was done.

No one company could build the NDT and indeed no one company should own it – it would become a new piece of national infrastructure. The only way one could create such an NDT is to establish the common frameworks, standards and interfaces to enable the thousands of component digital twins to be crowdsourced, and then connected together via data marts. These commons need to be open sourced or owned by national government.

The component digital twins will inevitably be built using different technologies and at different levels of fidelity and abstraction. They will need to be joined up horizontally such as transportation, smart cities and energy. They will also need to be joined up vertically such the digital twins of individual drones, an automated air traffic control system and the wider air transportation system. There is research that needs to be undertaken on the science of digital twins such as how best to couple them given different levels of abstraction, fidelity and latency.

One of the challenges facing the creation of data marts and data trusts is how to manage the data that won’t be open and free of charge. How to handle the fact that companies might be willing to share datasets for the greater good but not to have them weaponised by their competitors. This all leads to the concept of data passports to control whom the data can be shared with and for what purposes.

The same challenge will go for digital twins when we start to share and conjoin them. For example the Met Office might be willing to share a low fidelity weather simulation as part of a smart city digital twin but they are not going to give away their high fidelity commercial weather forecasting model for free, even assuming you had the supercomputer to run it on!

There would be significant security considerations associated with creating an NDT, especially if and when the underlying digital twins are coupled to the respective control systems for their physical twins. This would effectively be creating a country scale operating system.

Building an NDT is a task that would never ever be completed – there would always be more elements and higher levels of fidelity to model. However the good news is that each component digital twin would deliver value in its own right but the interconnection of these digital twins would deliver additional value above and beyond the sum of the value of the component digital twins.

For example, having a digital twin of the UK rail network would deliver benefits such as optimising timetables, minimising delays, derisking the impact of maintenance activities and so on. However when combined with digital twins of the bus, freight and road networks, along with airports and seaports, would deliver insights and optimisations across the transportation network as a whole. Then you might add a digital twin of the energy network to optimise the power distribution required to support the electrification of transport and so on.

We are collaborating with Centre for Digital Built Britain (CDBB) and others on this vision for an NDT. This vision includes digital twins for the built environment such as those mentioned above. It also needs to include digital twins for institutions such as the NHS, national government, our financial system and so on. Then we will want digital twins of aspects of the natural environment such as weather, agriculture, flood prevention and so on.

An NDT could enable us to make better use of scarce resources such as time, energy and land whilst minimising the unintended consequences such as waste and pollution. Indeed one of the most important applications of an NDT would be to help us achieve the UN Sustainable Development Goals.

8. Conclusion

Simulation and digital twins are a potentially hugely powerful catalyst for innovation, exploration and optimisation at both an organisational and country scale.

Some of the benefits of simulation and digital twins include:

- De-risking the physical world before you fund it and build it

- Stress testing your designs for exceptions

- Reducing the cost and impact of maintenance, including the use of predictive maintenance

- Optimising the use of scarce resources

- Faster than real time exploration and innovation

- Modelling developments within their wider ecosystem to make sure they will fit together

- Combining with ML models to achieve beyond human scale designs and optimisations

Opportunities for digital twins at a national scale would include:

- Supporting the implementation of UN Sustainable Development Goals

- Simulation as a step in public funding applications to help demonstrate that the proposals have legs

- Helping drive faster, safer, lower cost and more sustainable development

- Combining digital twins and living labs

- The benefits associated with creating an NDT

Creating an NDT would require:

- Government sponsorship of the NDT as a new critical piece of national infrastructure

- The creation of common standards, interfaces and middleware to enable the crowdsourcing and coupling of digital twins

- Research into the science of digital twins, including how to couple them

- Significant focus on baking in security from the outset

We encourage you to join us in building Data-Centric Engineering as a unique resource for engineers and data scientists, in academia and industry. Read our Call for Papers for more details and follow us on social media, @dce_journal.