Experimental research on emotions in children can be traced back to 1917, when Watson and Morgan hypothesized that fear, rage, and love are the most fundamental modes of emotional response (Watson & Morgan, 1917). Shortly afterwards, Watson and Rayner (1920) and Mary Cover Jones (1924) trained emotional reactions in young children, and Goodenough (1931) pioneered observation and data collection strategies for studying young children's emotions. These advances laid the foundations for today's research on emotions.

Research has shown that successful social interactions require accurate recognition of other people's emotions (Carton et al., 1999), and this also holds for children (Wang et al., 2019). Emotion recognition helps us predict others' mental states, actions, and intentions, and guides our own behavior in social interactions (Frith, 2009; Izard, 2007). This ability emerges early: within the first six months of life, infants can already discriminate facial expressions (Ruba & Repacholi, 2019). However, emotion reading continues to improve and become more refined throughout childhood (Tonks et al., 2007) and adolescence (Holodynski & Seeger, 2019; Lawrence et al., 2015).

Emotion recognition has been related to long-term functioning, including academic success (Denham et al., 2012; Izard et al., 2001) and social integration (Sette et al., 2017). Impaired emotion recognition has been associated with behavioral problems in children, such as aggressive behavior or violation of social norms (Wells et al., 2020), and with higher levels of psychological distress, psychological or somatic symptoms, and depression in early adolescence (Simcock et al., 2020). In addition, altered emotion recognition has been reported in people with a range of developmental and behavioral conditions, such as autism (Golarai et al., 2006), attention deficit hyperactivity disorder (Sinzig et al., 2008), psychopathy (Dawel et al., 2012), and behavioral problems (Cadesky et al., 2000). Even before the onset of schizophrenia, high-risk patients present profound deficits in emotion recognition, which suggests that young people who perform poorly on emotion recognition tasks should be targeted for early identification and intervention (Corcoran et al., 2015). Such early identification, however, requires proper tools that allow comparison with typically developing children and adolescents. Grosbras et al. (2018) highlighted the need to define the normal development of emotion perception during childhood and adolescence, given the scarcity of normative and psychometric data available to guide researchers and clinicians.

Recognizing others' emotions draws on different domains, including semantic information, prosody, and non-verbal visual cues such as body postures and facial expressions, or, as is usually the case, a combination of all of them (Heberlein & Atkinson, 2009). However, most studies in this area have focused on facial emotion recognition (de Gelder et al., 2015), understood as the ability to accurately identify and label emotional expressions of faces (Ekman & Friesen, 2003). Static face pictures have also been the most common type of stimuli in emotion recognition assessment, probably because confounding variables are harder to control when assessing other domains (Herba & Phillips, 2004). Recent literature has shown the importance of integrating other domains when studying emotion recognition, because emotions are identified faster and more accurately when cues from several domains are combined (de Gelder et al., 2015; van den Stock et al., 2007).

With the aim of understanding emotion recognition from an integrative point of view, researchers have shown increasing interest in emotional domains other than facial expressions. Prosody, which includes vocal intonation, stress, and rhythm, is another important source of emotional information (McCann et al., 2007). It plays an important role in linguistic functions as well as in emotion processing (Lindner & Rosén, 2006). Emotion recognition through prosody develops early: four-year-old children can already label emotions from unfamiliar voices, and this ability continues to improve between four and eleven years of age (Chronaki et al., 2015; Zupan, 2015). During this period, face processing seems to be more useful than voice processing for recognizing emotions (Kilford et al., 2016). Thus, emotion recognition from prosody develops later than face processing and reaches adult levels around the age of 15 (Grosbras et al., 2018). Another domain that has received modest interest in recent years is body movement. Six-and-a-half-month-old infants can already identify basic emotions from body movement (Hock et al., 2017). This skill then improves rapidly during early childhood, followed by a period of slower improvement until adult levels are reached at some point in adolescence (Ross et al., 2012). Overall, emotion recognition appears to be acquired progressively, although the trajectory differs slightly depending on whether the domain analyzed is faces, prosody, or body movements.

Traditionally, emotion recognition research has focused on faces, probably because of the well-established idea that facial expressions are more universal and consistent cues to emotion than other domains (de Gelder et al., 2015). In daily social contact, however, several emotional domains occur concurrently. Recent studies have shown that adults integrate information from faces, bodies, and voices to generate coherent representations of people (de Gelder et al., 2015). This integration starts early, as six-and-a-half-month-old infants already integrate basic emotions from bodies and faces (Hock et al., 2017). Infants benefit from the combination of facial expression and voice when identifying emotions (Gil et al., 2014), and around the age of 9 a developmental turning point allows children to integrate emotional domains more efficiently when perceiving emotions (Gil et al., 2016). Despite this evidence that emotion recognition is an integrated process, most of the tools used to measure it are still based on static faces. Moreover, resources for the specific assessment of children and adolescents are even scarcer. In some countries, such as Spain, there are no validated measures of integrated emotion recognition for children and adolescents.

The Profile of Nonverbal Sensitivity (PONS) Test (Rosenthal et al., 1979) and the Multimodal Emotion Recognition Test (MERT; Bänziger et al., 2009) combine single and mixed domains across several tasks. One limitation for their use with children is their length: 73 and 45 minutes, respectively. Both have been validated in adults, but no normative data are available for children or adolescents. One tool designed for minors is the Cambridge Mindreading Face-Voice Battery for Children (CAM-C; Fridenson-Hayo et al., 2016). It is an interesting tool that has been validated with children with autism spectrum conditions, but it presents face and voice stimuli separately rather than integrated. Moreover, its control sample is small (N = 30), which limits generalization to the whole population.

A brief tool that assesses integrated emotion recognition is the Bell-Lysaker Emotion Recognition Task (BLERT). It was originally designed for adults with psychosis, but its characteristics make it suitable for other populations. A recent initiative, the Social Cognition Psychometric Evaluation, tested the psychometric properties of social cognition measures in psychosis (Pinkham et al., 2014). In that initiative, the BLERT and the Hinting Task showed the strongest psychometric properties (Ludwig et al., 2017). Nevertheless, the BLERT has not been validated in children, and none of these scales has an alternate version that allows longitudinal assessment.

Because emotion recognition is acquired progressively during childhood, adolescence, and early adulthood, common integrative measures for these stages of life are needed to properly capture its developmental trajectory. Therefore, one aim of this study was to translate the BLERT into Spanish and to create an alternate version with the same characteristics. A second aim was to obtain normative data from a representative sample of Spanish children and adolescents.

Method

Participants

The study took place at five primary and secondary schools (one state school and four publicly funded private schools) in one region of Spain. The schools were in five different urban locations, in cities with populations ranging from 28,226 to 345,821 and per capita income ranging from 14,145 to 16,081. A total of 545 students (250 male and 295 female) volunteered to participate. Participants' school years ranged from year 3 of primary school to the first year of secondary school, corresponding to ages 8 to 15 years. There were no exclusion criteria. Data were collected between February 15, 2018, and February 28, 2020. The Ethics Committee of the University of Deusto approved the study (Ref: ETK–11/16–17). Because the surveys did not include students' names, it was agreed to obtain passive consent from parents: parents were informed and given the option of refusing their child's participation. No parents opted their children out of the study. Neither participants nor their legal guardians received any reward (financial or academic) for their participation.

Measures

Data on age and gender were collected, and the Bell-Lysaker Emotion Recognition Task (BLERT; Bell et al., 1997) was administered. The BLERT is an audiovisual multimodal emotion recognition task that uses 21 videos to evaluate the ability to discern positive, negative, and neutral emotions. The seven categories are happiness, sadness, fear, disgust, surprise, anger, and neutral. Each video lasts 15 seconds and shows the same actor delivering one of three monologues on work-related topics, providing dynamic facial, prosodic, and upper-body movement cues. After each vignette, the video was paused and participants' answers were recorded. The test score was the total number of correct responses. In its original validation, the test showed good categorical stability (κ = .94), discriminant validity, and very good test-retest reliability (r = .76; Bell et al., 1997).
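As a minimal sketch of the scoring rule (total number of correct responses over the 21 vignettes), the Python below counts matches against an answer key. The label strings, the key order, and the function name `blert_total` are hypothetical; the real BLERT item order is not reproduced here. Returning `None` for a protocol with unanswered items is an assumption modeled on how incomplete protocols were handled in this study.

```python
from typing import Optional, Sequence

# Hypothetical labels for the seven categories; in the BLERT each category
# appears once per monologue, across three monologues (7 x 3 = 21 items).
EMOTIONS = ("happiness", "sadness", "fear", "disgust", "surprise", "anger", "neutral")
N_ITEMS = 21


def blert_total(responses: Sequence[Optional[str]], key: Sequence[str]) -> Optional[int]:
    """Return the number of correct responses, or None if any item is unanswered.

    `responses` and `key` are hypothetical per-item label lists of length 21;
    an unanswered item is encoded as None.
    """
    if len(responses) != N_ITEMS or len(key) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items")
    if any(r is None for r in responses):
        return None  # incomplete protocol: left unscored
    return sum(r == k for r, k in zip(responses, key))


# Usage with a made-up key (not the real BLERT item order).
key = list(EMOTIONS) * 3
answers = list(key)
answers[0] = "anger"  # one incorrect answer
print(blert_total(answers, key))  # 20
```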

Procedure

The authors of the BLERT agreed to have the original English version translated into Spanish. A panel of experts in neuropsychology and linguistics reviewed the translation and amended it as required. Because the verbal content was intended to be neutral, back-translation was not deemed necessary. In the BLERT, three different sentences about work-environment issues are each performed 7 times, once per emotion, resulting in 21 scenes. To create an alternate test, three new sentences with a similar structure but about school-environment issues were written. These new sentences replicate the original BLERT sentences in a new context; for example, “supervisor” was changed to “professor”. In total, 42 scenes were recorded, with one actor performing each of the 6 sentences with each of the 7 emotions. The scenes were then edited following the same model as the original test. This resulted in two videos lasting 7 min 49 s (BLERT) and 7 min 38 s (new alternate test). The Spanish versions were named “Bell-Lysaker Emotion Recognition Task-Spanish Version I” (BLERT–SI) and “Bell-Lysaker Emotion Recognition Task-Spanish Version II” (BLERT–SII). Participants completed BLERT–SI and BLERT–SII in two sessions two weeks apart, and the order of presentation was counterbalanced across visits. The tasks were completed in groups at the participants' regular schools; videos were presented on a digital board screen and the audio was played through the classroom speaker system. The psychometric characteristics of each test were calculated using data collected during both visits. Across the two visits, 495 participants correctly completed BLERT–SI and 494 completed BLERT–SII. Four participants did not complete one of the two versions, and 83 participants missed some items on BLERT–SI and/or BLERT–SII in Visit 1 or 2. Consequently, 87 participants were excluded, leaving 458 participants who completed both BLERT–SI and BLERT–SII. Figure 1 shows the flow diagram with the dropout details.
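The exclusion step described above amounts to listwise deletion: only participants with fully answered BLERT–SI and BLERT–SII forms were retained. The sketch below assumes a hypothetical per-participant dictionary layout (the names `is_complete` and `complete_cases` are illustrative) and also checks the arithmetic of the reported flow.

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical layout: participant id -> {"SI": responses, "SII": responses},
# where a form that was not taken is None and an unanswered item is None.
Form = Optional[Sequence[Optional[str]]]


def is_complete(form: Form, n_items: int = 21) -> bool:
    """True if the form was taken and every item was answered."""
    return form is not None and len(form) == n_items and all(r is not None for r in form)


def complete_cases(data: Dict[str, Dict[str, Form]]) -> List[str]:
    """Listwise deletion: keep participants with complete BLERT-SI and BLERT-SII forms."""
    return [pid for pid, forms in data.items()
            if is_complete(forms.get("SI")) and is_complete(forms.get("SII"))]


# Consistency check of the counts reported in the Procedure:
# 4 participants missed a whole version and 83 missed some items.
VOLUNTEERS, MISSED_VERSION, MISSED_ITEMS = 545, 4, 83
assert VOLUNTEERS - (MISSED_VERSION + MISSED_ITEMS) == 458
```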

Figure 1. Flow Diagram of Dropped-Out Participants and Final Sample

Data Analysis

For each test version, normality was assessed with the Kolmogorov-Smirnov test, and internal consistency was assessed with Cronbach's alpha and with split-half Spearman-Brown coefficients. Gender differences were tested with the Mann-Whitney U test. Spearman's rank correlation coefficients were calculated to assess test-retest reliability and the effect of age. Percentile scores were calculated by age. The significance level was set at .05, and all tests were two-tailed. Data were analyzed with IBM SPSS Statistics for Windows, version 23.0 (IBM Corp., Armonk, NY, USA).
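The two internal-consistency statistics named above can be sketched in plain Python. Cronbach's alpha uses α = k/(k − 1) · (1 − Σ item variances / variance of total scores), and the split-half coefficient applies the Spearman-Brown correction 2r/(1 + r) to the correlation between the two half-test totals. The odd/even split is an assumption (the paper does not report how the 21 items were divided), and this illustrates the formulas rather than reproducing the SPSS output.

```python
import math
import statistics
from typing import Sequence

# Rows = participants, columns = items (1 = correct, 0 = incorrect).
Matrix = Sequence[Sequence[float]]


def cronbach_alpha(items: Matrix) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(items[0])
    item_vars = [statistics.variance([row[j] for row in items]) for j in range(k)]
    total_var = statistics.variance([sum(row) for row in items])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def split_half_spearman_brown(items: Matrix) -> float:
    """Correlate odd-item and even-item half totals, then step up with 2r / (1 + r)."""
    odd = [sum(row[0::2]) for row in items]   # items 1, 3, 5, ...
    even = [sum(row[1::2]) for row in items]  # items 2, 4, 6, ...
    r = pearson(odd, even)
    return 2 * r / (1 + r)


demo = [[1, 0, 1], [1, 1, 1], [0, 0, 1], [0, 0, 0]]  # made-up 4 x 3 data
print(round(cronbach_alpha(demo), 3))  # 0.75
```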

Results

Table 1 shows the score distributions in detail. Normality checks showed negatively skewed distributions for both BLERT–SI and BLERT–SII, with greater asymmetry for BLERT–SI.

Table 1. Score Distribution of BLERT–SI and BLERT–SII

Note. BLERT–SI = Bell-Lysaker Emotion Recognition Task-Spanish Version I; BLERT–SII = Bell-Lysaker Emotion Recognition Task-Spanish Version II.

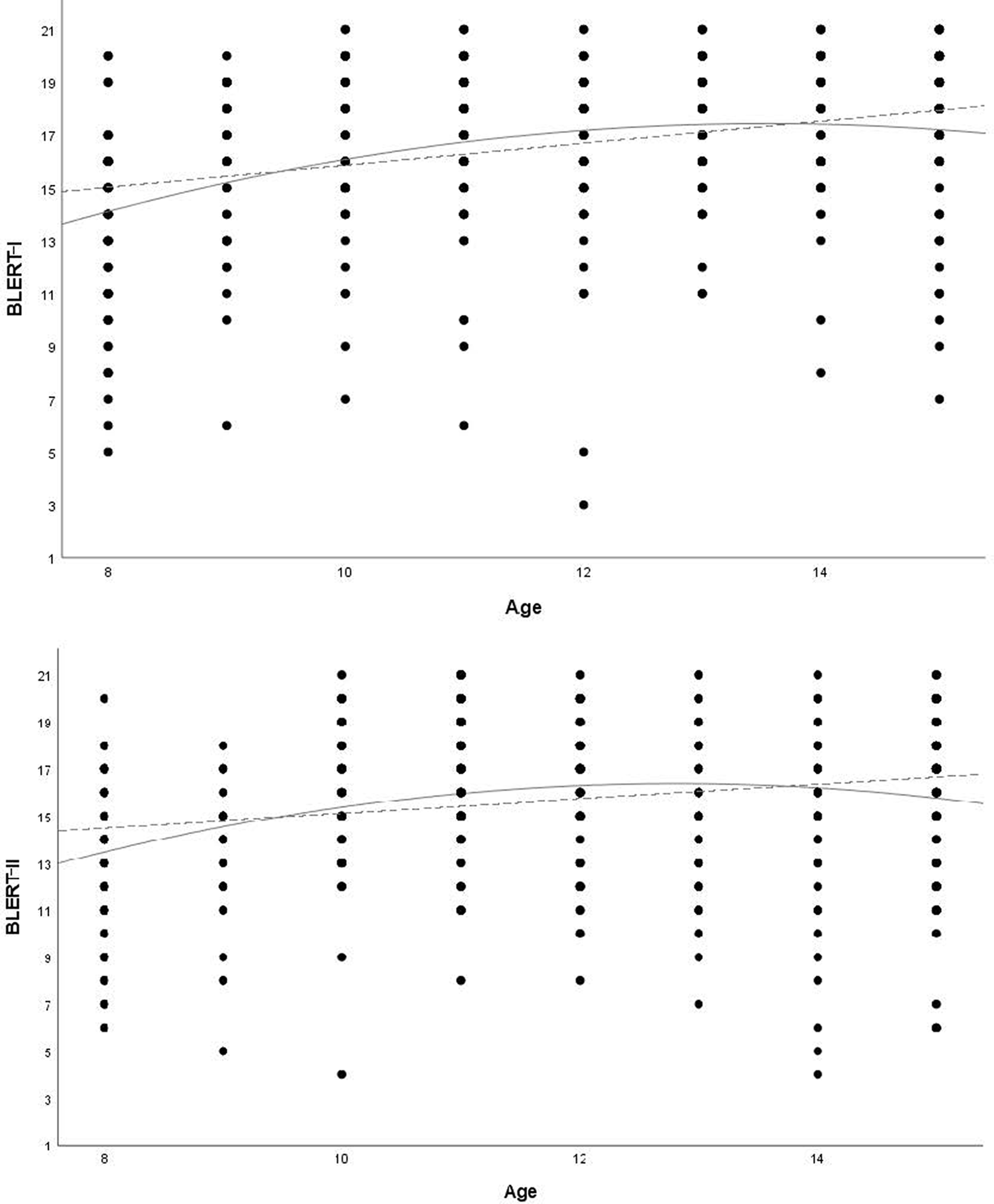

Gender differences were also explored. Performance on BLERT–SI differed significantly between girls (Mdn = 16; M = 16.86) and boys (Mdn = 16; M = 16.16) (U = 34813, z = 2.671, p = .008, r = .012). Although the effect size was small, gender was taken into account as a covariate. There were no statistically significant gender differences on BLERT–SII (U = 32506.5, z = 1.379, p = .168, r = .062). Age correlated positively with BLERT–SI (r = .312; p < .001) and BLERT–SII (r = .208; p < .001). Figure 2 shows scatter plots of BLERT–SI and BLERT–SII scores by age.
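The gender comparison above reports U, z, and an effect size r. A common convention, assumed here, is r = |z|/√N, with N the total number of observations in both groups. The sketch below computes U with mid-ranks for tied scores (frequent on a 0 to 21 scale) and z from the tie-corrected normal approximation. It reports the smaller of the two U values, which may differ from the U convention of a given software package; the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based ranks i+1 .. j+1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def mann_whitney(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (U, z, r): smaller U, tie-corrected z (signed toward x), and r = |z| / sqrt(N)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())  # sum over tie groups of (t^3 - t)
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    z = (u1 - n1 * n2 / 2) / sigma
    return u, z, abs(z) / math.sqrt(n)
```

A positive z means the first group tends to score higher; the sign of the effect size is dropped in r, so direction must be read from z or the group medians.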

Figure 2. Distribution of BLERT–SI and BLERT–SII Scores by Age. Comparison of Linear and Quadratic Trends
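Figure 2 compares linear and quadratic age trends. One simple way to make that comparison, sketched below under the assumption of ordinary least squares, is to fit both polynomials and compare their R². The authors' fitting procedure is not described, and `polyfit` and `r_squared` here are hypothetical stdlib helpers (solving the normal equations by Gaussian elimination), not a library API. With real data, centering age before fitting improves numerical conditioning.

```python
from typing import List, Sequence


def polyfit(x: Sequence[float], y: Sequence[float], degree: int) -> List[float]:
    """Least-squares coefficients [b0, b1, ..., b_degree] via the normal equations."""
    m = degree + 1
    # Normal equations A b = c, with A[i][j] = sum x^(i+j) and c[i] = sum y * x^i.
    a = [[sum(xi ** (i + j) for xi in x) for j in range(m)] for i in range(m)]
    c = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(m)]
    # Forward elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        c[col], c[piv] = c[piv], c[col]
        for r in range(col + 1, m):
            f = a[r][col] / a[col][col]
            for k in range(col, m):
                a[r][k] -= f * a[col][k]
            c[r] -= f * c[col]
    # Back substitution.
    b = [0.0] * m
    for i in reversed(range(m)):
        b[i] = (c[i] - sum(a[i][k] * b[k] for k in range(i + 1, m))) / a[i][i]
    return b


def r_squared(x: Sequence[float], y: Sequence[float], coefs: Sequence[float]) -> float:
    """Proportion of variance in y explained by the fitted polynomial."""
    mean_y = sum(y) / len(y)
    pred = [sum(b * xi ** p for p, b in enumerate(coefs)) for xi in x]
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, pred))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Usage with made-up (age, score) data: compare the two trends by R^2.
ages = [8, 9, 10, 11, 12, 13, 14, 15]
scores = [14, 15, 16, 17, 17, 18, 18, 18]
print(r_squared(ages, scores, polyfit(ages, scores, 1)),
      r_squared(ages, scores, polyfit(ages, scores, 2)))
```

Because the quadratic model nests the linear one, its R² can never be lower; in practice the gain would be judged with an F test or an information criterion rather than R² alone.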

Internal consistency was acceptable for BLERT–SI (α = .700) and BLERT–SII (α = .712). Split-half reliability (Spearman-Brown coefficient) was .651 for BLERT–SI and .667 for BLERT–SII. For the item analysis, each item was removed in turn and the internal consistency and mean (maximum 20) were recalculated (Table 2). Reliability increased when Items 2, 4, 5, or 9 were removed from BLERT–SI and when Items 1, 6, or 21 were removed from BLERT–SII, but none of these increases was statistically significant (χ² = 1.017–(df = 1); p = .076–.336) (Diedenhofen & Musch, 2016). Parallel-forms and test-retest reliability, assessed as the correlation between BLERT–SI and BLERT–SII administered at the two visits with gender as a covariate, was moderate (r = .45; p < .001).
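The correlation between the two forms "with gender as a covariable" can be read as a first-order partial correlation, r_xy·z = (r_xy − r_xz · r_yz) / √((1 − r_xz²)(1 − r_yz²)). The paper does not state whether a Pearson or a rank-based coefficient was partialled; the sketch below assumes a rank-based version, applying the partial-correlation formula to Spearman coefficients, with gender coded 0/1. All function names are illustrative.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of mid-ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def partial_corr(r_xy: float, r_xz: float, r_yz: float) -> float:
    """Correlation of x and y after removing the linear effect of z from both."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_spearman(x, y, z) -> float:
    """Form I vs form II scores (x, y) controlling for a covariate z (e.g. gender 0/1)."""
    return partial_corr(spearman(x, y), spearman(x, z), spearman(y, z))
```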

Table 2. Mean and Cronbach's Alpha Reliability Index of Total Scale After Removing Each Item in BLERT–SI and BLERT–SII

Note. BLERT–SI = Bell-Lysaker Emotion Recognition Task-Spanish Version I; BLERT–SII = Bell-Lysaker Emotion Recognition Task-Spanish Version II; α = Cronbach's alpha.

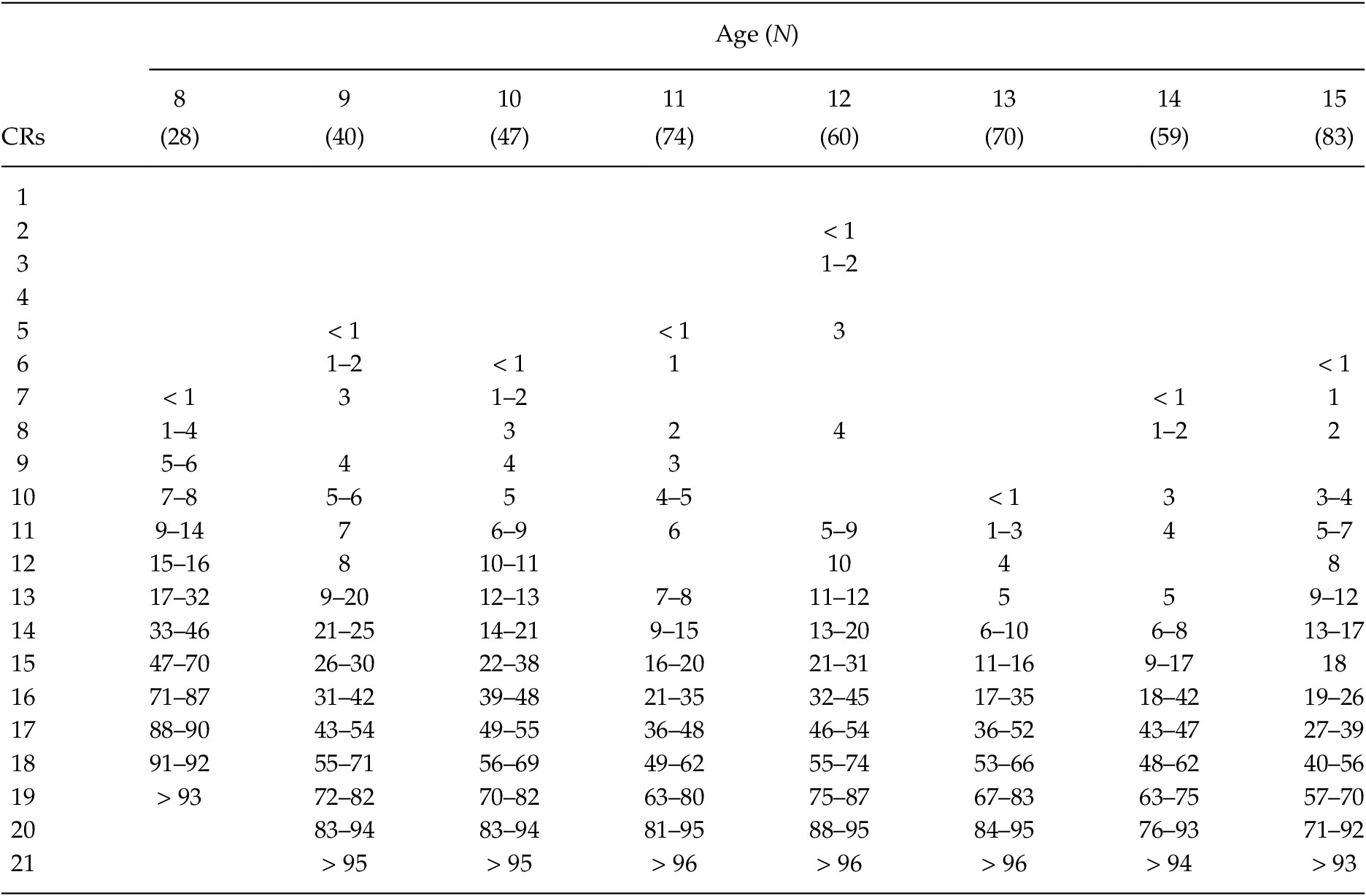
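The item analysis summarized in Table 2 (total mean and alpha recalculated after dropping each item) can be sketched as follows. This reproduces only the "alpha if item deleted" computation; testing whether a change in alpha is significant, as done with the Diedenhofen and Musch (2016) procedure, is not implemented here. The function names are hypothetical, and the scale must have at least three items so that each reduced scale keeps two or more.

```python
import statistics
from typing import List, Sequence, Tuple

# Rows = participants, columns = items (1 = correct, 0 = incorrect).
Matrix = Sequence[Sequence[float]]


def cronbach_alpha(items: Matrix) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(items[0])
    item_vars = [statistics.variance([row[j] for row in items]) for j in range(k)]
    total_var = statistics.variance([sum(row) for row in items])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def item_deleted_stats(items: Matrix) -> List[Tuple[float, float]]:
    """For each item j: (mean total score without j, Cronbach's alpha without j)."""
    k = len(items[0])
    out = []
    for j in range(k):
        reduced = [[v for i, v in enumerate(row) if i != j] for row in items]
        mean_total = statistics.fmean([sum(row) for row in reduced])
        out.append((mean_total, cronbach_alpha(reduced)))
    return out


demo = [[1, 0, 1], [1, 1, 1], [0, 0, 1], [0, 0, 0]]  # made-up data
for j, (mean_total, alpha) in enumerate(item_deleted_stats(demo), start=1):
    print(f"item {j}: mean = {mean_total:.2f}, alpha if deleted = {alpha:.3f}")
```

For the 21-item BLERT forms, the reduced total has a maximum of 20, which matches the "maximum 20" means reported for this analysis.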

Ceiling-level performance was reached by 3.8% and 2.6% of the sample on BLERT–SI and BLERT–SII, respectively. Percentile equivalents by age are provided in Tables 3 and 4.

Table 3. Percentile Correspondence with BLERT–SI Scores

Note. CRs = Number of correct responses in BLERT–SI.

Table 4. Percentile Correspondence with BLERT–SII Scores

Note. CRs = Number of correct responses in BLERT–SII.
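Tables 3 and 4 map raw scores to percentiles within each age group. The paper does not state which percentile definition was used; the sketch below assumes the common mid-rank definition, PR = 100 · (number below + 0.5 · number equal) / n, and also computes the share of scores at the ceiling of 21. The record layout and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def norms_by_age(records: Iterable[Tuple[int, int]]) -> Dict[int, List[int]]:
    """Group raw scores (number of correct responses) by age in years."""
    groups: Dict[int, List[int]] = defaultdict(list)
    for age, score in records:
        groups[age].append(score)
    return dict(groups)


def percentile_rank(score: int, reference: List[int]) -> float:
    """Mid-rank percentile: % of the reference group below `score`, ties counted as half."""
    below = sum(s < score for s in reference)
    equal = sum(s == score for s in reference)
    return 100 * (below + 0.5 * equal) / len(reference)


def ceiling_rate(scores: List[int], max_score: int = 21) -> float:
    """Percentage of scores at the maximum possible score."""
    return 100 * sum(s == max_score for s in scores) / len(scores)


# Usage with made-up records: (age, correct responses).
norms = norms_by_age([(9, 14), (9, 16), (9, 16), (9, 19), (10, 21)])
print(percentile_rank(16, norms[9]))  # 50.0
```

Because percentiles are computed against the same-age reference group of each form, a child's standing on BLERT–SI and BLERT–SII can be compared even if the raw scores of the two forms are not fully equivalent.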

Discussion

This study presents, for the first time in Spanish children and adolescents, psychometric and normative data for an integrative measure of emotion recognition (BLERT–SI) and a new alternate version (BLERT–SII). Performance on both versions improved non-linearly between 8 and 15 years of age, indicating progressive acquisition and development of emotion recognition during this period.

Most tools traditionally used to assess emotion recognition use static pictures of faces as stimuli, such as the well-known Reading the Mind in the Eyes Test (Baron-Cohen et al., 2001) and Ekman's Pictures of Facial Affect (Ekman, 1976), both of which have been adapted into several new versions and used in several countries (Molinero Caparrós et al., 2015; Vázquez-Campo et al., 2016). Researchers have since created tools with more updated stimuli (color multi-racial images, isolated voices, images that include body expressions or a scene) as an improvement on the initial black-and-white images (Gur et al., 2002; Mayer et al., 2009; Nowicki & Duke, 1994). Tools like the BLERT emerged as growing evidence supported the need for tools that integrate more than one domain. The validation of the BLERT as an integrated emotion recognition scale for Spanish children and adolescents offers a reliable tool with normative data to measure emotion recognition. Moreover, the availability of an alternate version allows clinicians and other professionals to measure changes over time, which could be crucial in assessing neurodevelopmental disorders and conditions. However, BLERT–SI and BLERT–SII showed only moderate test-retest reliability. The normative data reported here help to address this limitation, since percentile scores can be used instead of raw scores. Percentiles locate each person relative to their reference population, so percentiles from BLERT–SI and BLERT–SII can be compared in longitudinal assessments. The three sentences used in BLERT–SI were related to work issues, whereas three new sentences replicating their message and structure were created for BLERT–SII; the only difference was that these sentences revolved around school issues. This slight difference in topic may partially explain why the two scales do not seem to be fully equivalent.

Performance in our sample of 8- to 15-year-olds improved significantly with age; nevertheless, the effect size of this improvement was moderate. Previous literature has found nonlinear development of emotion recognition from childhood to adulthood (Grosbras et al., 2018; Ross et al., 2012). Grosbras et al. (2018) identified a developmental trajectory of emotion perception from voice, with faster improvement during childhood followed by a plateau during adolescence. Comparing their data with adult data, they found that adult performance is reached between 14 and 15 years of age. The pattern is slightly different for emotion recognition from body movements: Ross et al. (2012) showed strong improvement during early childhood up to 8.5 years of age, followed by slower improvement until adolescence. Our results shed light on the possible developmental trajectory when domains are integrated. From 8 years of age, acquisition appears to progress slowly, and a plateau is apparently reached at 14–15 years, consistent with the nonlinear acquisition of emotion recognition suggested by Ross et al. (2012). However, more data are needed to clarify this trend.

Some limitations of the present data should be taken into account. One limitation is the lack of exclusion criteria: there was no psychiatric or neurological screening, so all students had the opportunity to participate. Nevertheless, the study aimed to provide normative data for the general population of children and adolescents. The results may therefore be useful for detecting children and adolescents who perform poorly, who could then be assessed with more extensive tools such as the PONS or the CAM-C. Children with developmental or behavioral issues can be expected to score low on these scales, which could signal the need for a deeper assessment to detect possible clinical cases such as autism or attention deficit. More studies comparing control and clinical samples are needed to establish cut-off scores that would allow these scales to be used as screening tests. It was also not possible to collect data on other sociodemographic variables that could have affected participants' performance. For example, life experiences and background factors such as socioeconomic status (Cooper & Stewart, 2021; Manstead, 2018) or adverse experiences (Anda et al., 2006; Arslan et al., 2021) have been shown to influence people's social behavior. More studies on the role of these variables in emotion recognition in children and adolescents are needed.

There are also some psychometric limitations. First, test-retest reliability was estimated from the correlation between the two versions, so it needs further study; test-retest data for each version should be an objective of future studies to better understand the scales' stability over time. Second, no other measures could be included, so external and construct validity could not be tested. Although there are some data on construct validity in other populations (Pinkham et al., 2014), it needs to be tested in non-adult samples to ensure that the scale measures emotion recognition. Future studies should address these limitations.

The test design also presents a limitation regarding gender, since a male actor performed all scenes. This design increases homogeneity among stimuli but does not allow control of male/female face biases in emotion recognition (Hess et al., 2009). Nevertheless, a single actor also performed all scenes in the original test, and since the aim of this study was to replicate the test in Spanish, it was decided not to make any significant changes to the test design. Another methodological issue is that data were collected in groups rather than through individual assessments, which implies that the present normative data are best suited to group assessments. Since emotion processing is progressively being introduced into educational curricula in some countries, such as Spain (Real Decreto 126/2014; Ministerio de Educación, Ciencia y Deporte, 2014), and there is increasing evidence of its benefits (Di Maggio et al., 2017), schools may be interested in measuring multimodal emotion recognition in their students. Individual use is nevertheless possible if data are interpreted with caution.

Despite these limitations, this study offers normative data for a population for which barely any data were available, making it possible to compare an individual child's or adolescent's performance with the general reference population. These data are especially important because, while better performance is generally expected with age, it is impossible to know whether an observed improvement matches what is expected for a given age or stage unless normative data are available. In addition, data from two alternate emotion recognition tasks were provided, allowing longitudinal monitoring of social cognition while reducing the practice effects that arise from repeating the same test. Such longitudinal follow-up is particularly important in children and young people, given the developmental changes of these age periods, to ensure that proper and reliable comparisons can be made over time.